Why the NYT lawsuit won’t save creators

This week: Implications of the New York Times’ lawsuit against OpenAI; key takeaways from a survey of ~3000 machine learning researchers on the future of AI; and 2024 election predictions

The NYT’s lawsuit against OpenAI won’t save creators

The tech and legal worlds are abuzz with discussion of the December lawsuit filed by the New York Times, accusing OpenAI of using the Times’ articles for model training without compensation. OpenAI argues they can do so under “fair use,” a provision under copyright law that allows copyrighted materials to be used in certain limited scenarios like education, newsgathering, and other purposes that don’t commercially harm the original material’s creator. (See our November post “The Armageddon scenario for OpenAI” for a deeper dive into LLM copyright questions.)

Fair use questions are notoriously messy. For every IP lawyer who says OpenAI is protected, you can find one who says the opposite. But there is consensus on one point: this is the strongest suit yet against AI developers. It cites numerous clear examples of harm, like consumers using ChatGPT to circumvent the Times’ paywall, and ChatGPT producing “hallucinations” that could jeopardize the Times’ reputation for deep research and accuracy.

Coming from the leading brand in American news, the lawsuit is an aggressive step toward claiming rights for copyright holders in the AI era – but even a favorable outcome for the Times won’t be the change rights holders need to protect their work.

If we as a society conclude that large language models are worth keeping around, perhaps the ideal law of the land would let tech companies access the data they need to create useful models while ensuring copyright holders are compensated with a fair cut of the value created – and are able to opt in/out entirely. There is recent (but imperfect) precedent here – Google/YouTube, Facebook/Instagram, and other large digital platforms aggregate content and compensate the creators for it directly or indirectly (via web traffic, revenue share, or monetizable audiences). In the current version of large language models, creators don’t generally receive traffic, money, attention, or even credit; this puts them in direct competition for revenue with the companies building the LLMs.

So, does the NYT lawsuit move the world closer to this kind of mutually beneficial partnership?

Probably not. To understand why, it’s essential to look at the two possible outcomes of the lawsuit: a settlement and judicial resolution.

Settlement: This case is likely to settle because courts generally prefer that parties reach a resolution themselves, especially in a situation in which both the technology and use cases are evolving so quickly. Media companies are already striking deals with AI developers, but the existing payments for their content are paltry. If the New York Times and OpenAI reach a comprehensive settlement and licensing agreement, they’ll provide a template for other media companies and creators to strike more lucrative licensing deals. But it’s not clear how such an agreement would translate into compensation for other creators like individual artists, who lack the financial and legal resources of the Times.

Judicial resolution: Without a settlement, this case will take at least a decade to resolve. By that time, AI technology (and the world) will be so substantially different that the suit will be effectively irrelevant. In that multiyear period, AI companies will continue to hoover up copyrighted data, and creators will be left without compensation for their work.

The only medium-term recourse for the vast majority of copyright holders is a political fix. Both the Constitution and federal law clearly establish protections for creators. When the law fails to protect them in time, responsibility falls to Congress.

Public sentiment favors creators. In March 2023, Data for Progress found that 70% of likely voters were concerned about technology companies profiting off their users’ data. Polls have also consistently found strong support for AI regulation amongst the public as well as widespread fear of AI’s possible impact on jobs.

Similar bipartisan sentiment is shaping up in Congress; at a Senate hearing this week on AI’s impact on journalism, senators from both parties argued that tech companies had both a moral and a legal imperative to pay creators for their work if it is used to train models.

Unfortunately, this still isn’t much of a reason to be optimistic about political movement on AI copyright in favor of creators. Most individual creators lack organized muscle in DC, unlike major media companies, which use the News Media Alliance (the trade association representing ~2000 newspapers in the US and Canada) to push for policies that benefit them.

On the other side, OpenAI has already engaged in a lobbying spree, corralling academics, think tanks, and public interest groups to argue specifically that Congress should leave AI copyright policy up to the courts.

Without a well-organized force to lobby in favor of creators, and facing the ambient gridlock in Congress, tech companies are likely to get what they want.

* Special thanks to IP lawyer, professor, and former Connecticut State Senator Bill Aniskovich for his input.

Machine learning researchers predict the future

It’s been just over a year since ChatGPT’s release. Given the rapid evolution of generative AI technology and its step change in functionality relative to older tools, it’s reasonable to be anxious about what the next few years will bring. The AI regulatory discourse often switches rapidly (and confoundingly) between near-term risks like political deepfakes and long-term ones like “Skynet” AI takeovers of society.

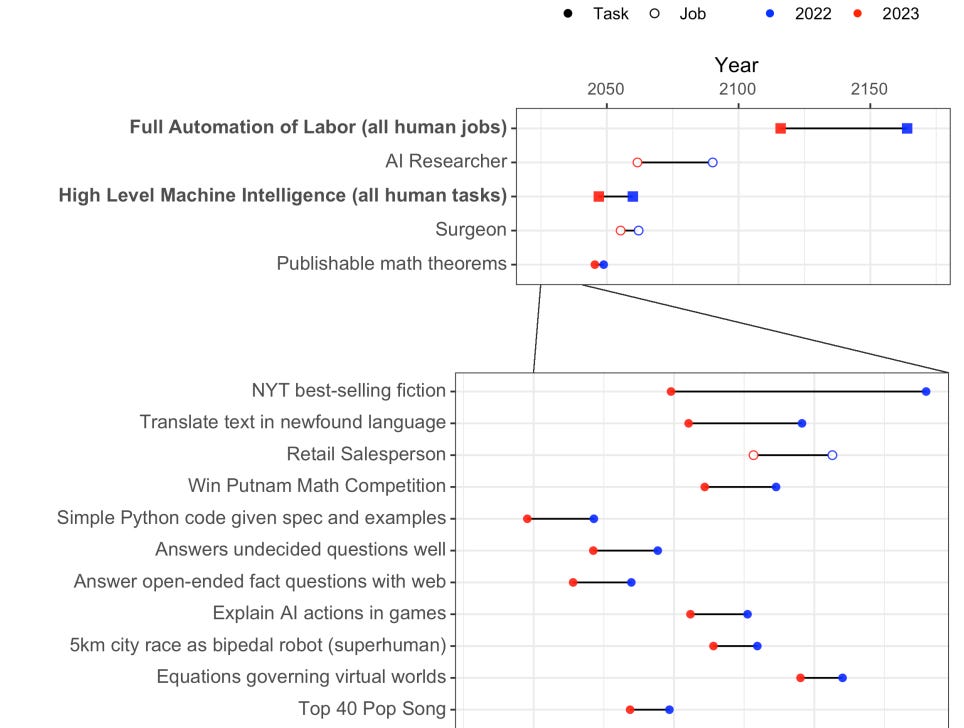

Amongst such a vast array of concerns, concrete guidance to help regulators prioritize specific initiatives is welcome. One useful dataset was released this week by AI Impacts, which surveyed nearly 3000 machine learning researchers to gather predictions about the future of AI. The good news: most researchers don’t expect all human jobs to be automated away until ~2120, if ever. The bad: the average projected year in which that might happen has moved closer by 50 years since the survey was last run in 2022.

Key survey takeaways:

The probability of machines outperforming humans in every possible task was estimated at 10% by 2027 and 50% by 2047 (13 years earlier than researchers estimated in the 2022 survey);

Researchers estimated representative jobs like retail salespersons and truck drivers would be automated by the early 2030s, and surgeons in the early 2050s;

Researchers thought that there was at least a 50% chance that within the next ten years, AI would be proficient at tasks including “writing new songs indistinguishable from real ones by hit artists such as Taylor Swift” or coding a payment processing site from scratch;

Only 20% of respondents said that AI will be able to truthfully and intelligently explain its decisions by 2028; and

The vast majority of respondents (~70%) said AI safety should be prioritized “more” or “much more.”

Machine learning researchers are intimately familiar with AI, but they’re not domain experts, and they likely aren’t factoring in any job-preserving protectionist regulations favoring humans over AI. We also wouldn’t take any specific estimate to heart: the value here is more in the relative rankings of possible impacts, and the year-over-year changes in predictions that illustrate how the technology is changing.

Here’s hoping Taylor Swift can hang on for another 25 years.

2024 Election Predictions

In case you missed it, check out our piece in The Information on how AI is likely (or not) to impact the U.S. elections this year.

Of Note

Copyright

OpenAI and Journalism (OpenAI). OpenAI’s response to the New York Times’ copyright lawsuit.

How Adobe is managing the AI copyright dilemma, with general counsel Dana Rao (The Verge)

Microsoft, OpenAI sued for copyright infringement by nonfiction book authors in class action claim (CNBC)

This AI Deal Will Let Actors License Digital Voice Replicas (CNET)

Campaigns, Elections and Governance

California’s budget deficit and reluctance from Gavin Newsom could limit AI regulation (San Francisco Chronicle)

State Legislators, Wary of Deceptive Election Ads, Tighten A.I. Rules (The New York Times)

Hochul’s plan for AI in New York (Politico)

Technology

Duolingo cut 10% of its contractor workforce as the company embraces AI (TechCrunch)

GenAI could make KYC effectively useless (TechCrunch)

Samsung Delays Production at New US Factory to 2025, Daily Says (Bloomberg). TSMC has also postponed production at its Arizona fab to 2025; US chip ambitions are plagued by permit and subsidy issues.