Trump voters fear an AI election

And bipartisan efforts to regulate AI in federal and state governments make progress

This week, meaningful bills came out of the Senate and the California legislature, and a new poll shows Americans expect AI-driven misinformation in the 2024 elections.

We have one request this week: do you have a friend or colleague who might be interested in subscribing? Forward this over!

Bipartisan federal action on AI is taking shape

The most important development this week may not have been Schumer’s CEO-heavy AI Forum event, but instead the “Bipartisan Framework for U.S. AI Act” from Sens. Blumenthal (D-CT) and Hawley (R-MO), an intriguing federal policy proposal for non-electoral AI regulation. Blumenthal and Hawley are the Chair and Ranking Member of the Judiciary Subcommittee on Privacy, Technology, and the Law. Coming out of the AI Forum, Sen. Blumenthal noted broad agreement between industry and labor on many points, and the framework covers quite a few of them: users should know they’re interacting with an AI system, generated media should be watermarked, consumers should be protected from harm, models should require safety evaluations, and more.

One area of ongoing debate is whether sophisticated AI models should require a license to operate. The proposed framework favors licensing, but the devil will be in the details. Licensing is favored by industry incumbents like Microsoft and OpenAI and opposed by proponents of open-source software like Meta and Hugging Face.

In addition to the promising framework from Blumenthal and Hawley, Sen. Amy Klobuchar (D-MN) and a bipartisan group of senators have introduced the most significant AI elections bill to date. It would ban deceptive AI-generated content in election ads, some issue advocacy, and political fundraising, ending the ongoing debate at the Federal Election Commission over whether it has sufficient legal jurisdiction to regulate AI in election advertising.

This bill is one in a series of political advertising bills Sen. Klobuchar has championed, including the ‘Honest Ads Act,’ which closes a loophole related to disclaimers on certain types of online political ads, and the ‘REAL Political Ads Act,’ which would require a disclaimer on ads that use AI-generated media. These are table-stakes measures with little downside, but we’ll see whether they pass in time to be enforced for 2024.

Americans, especially Republicans, expect AI misinformation in the 2024 elections

This week’s federal regulatory developments highlighted bipartisan support for AI regulation in Washington, and a new Morning Consult poll shows that this support extends to the American public, with strong undercurrents of concern about how AI will affect the next election cycle:

53% of respondents said misinformation spread by AI will impact who wins the 2024 election, a view that was shared across Fox, CNN, and MSNBC “frequent watchers.”

Fully one-third of respondents said AI would result in less trust in election results.

Trump supporters were nearly twice as likely as Biden supporters (47% vs. 27%) to expect that AI will decrease their trust in 2024’s election results.

The tilt in distrust among Trump supporters is a worrying sign for election integrity. Claims of fraud in the 2020 election frequently cited hacking, technological interference, and the like as justification without evidence. AI will make an easy villain in December 2024.

AI policy is gathering steam in California with support from labor

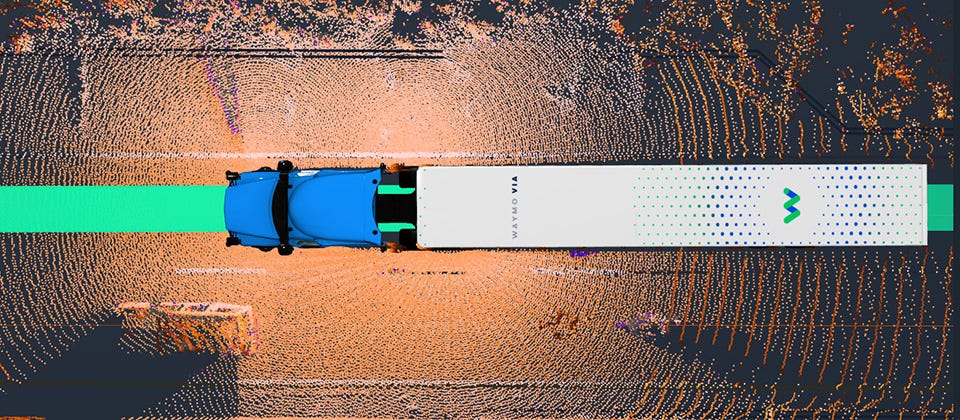

There’s also big news out of California, with a driverless truck ban overwhelmingly passing the legislature but headed for a gubernatorial veto, and two other significant regulatory efforts taking shape in the Assembly and the State Senate for 2024:

AB-316 overwhelmingly passed the legislature and would effectively ban driverless trucks until 2029 or until at least 5 years after testing begins, whichever is later. It’s headed to the desk of Gov. Newsom, who is expected to veto it. Per TechCrunch, the CA DMV does not currently allow testing of self-driving trucks, although it has the authority to do so. This bill would take that authority away from the DMV and give it to the legislature itself, where a labor-friendly Democratic supermajority would ensure that self-driving trucks do not threaten the Teamsters.

AB-459, introduced by Assembly Member Ash Kalra (D-25), would invalidate any past or future contract an actor may have signed that allows their voice or likeness to be used to create a digital replica or train a generative AI system. This bill also enjoys strong support from labor, specifically SAG-AFTRA, the union representing actors and other entertainment industry workers.

SB-294, introduced by Sen. Scott Wiener (D-11), is an AI “safety framework” focusing on the testing and security of AI models, third-party auditing requirements, and, importantly, liability for companies and actors that fail to take safety precautions. It’s an intent bill, meaning that it’s not eligible for legislative consideration but is intended to generate discussion and more concrete legislation in the next session. Sen. Wiener represents San Francisco and its environs, so it’s not surprising to see a more technical AI bill come out of his office, and we expect to see him lead on future legislation.

California is well positioned to lead on AI regulation due to its proximity to the industry and its history of pioneering legislation that shapes federal standards, not to mention the immediate relevance to many of its citizens (Hollywood and self-driving cars are here!). While AB-316 may be largely political posturing, AB-459 and SB-294 have the potential for real impact.

Of Note

Copyright

The FAIR Act: A New Right to Protect Artists in the Age of AI (Adobe) Adobe proposes that Congress allow artists to sue for damages anyone who “intentionally and commercially” impersonates an artist’s work or likeness using AI tools.

Amazon to require some authors to disclose the use of AI material (AP)

One day after suing OpenAI, Michael Chabon and other authors sue Meta (San Francisco Chronicle) A group of authors has filed federal lawsuits against OpenAI and Meta for training their models on the authors’ work without their consent. They’re seeking class-action status.

Other

Artificial intelligence technology behind ChatGPT was built in Iowa — with a lot of water (AP) AI has a huge environmental footprint; this article quantifies how much water it takes to cool the data centers powering new AI models.

Sweden brings more books and handwriting practice back to its tech-heavy schools (AP) Sweden, a leader in childhood education outcomes, has taken steps to reduce reliance on technology in classrooms, citing significant declines in reading ability and “clear scientific evidence that digital tools impair rather than enhance student learning.” With all the recent discussion of how AI will and should affect education, it’s an interesting development.

The law firm acting as OpenAI’s sherpa in Washington (POLITICO) DLA Piper, a well-known law firm with deep DC expertise, has been advising Sam Altman and helping OpenAI as it attempts to woo lawmakers.

Google's $20M for responsible AI (Axios) Google's philanthropic arm is investing $20 million in a new Digital Futures Project and an accompanying fund designed to help ensure AI fulfills its promise and avoids potential pitfalls.