This week, a Trump campaign advisor deepfakes an NBC News correspondent, Microsoft courts labor, and European policymakers agree on the broad strokes of the EU AI Act, though the details are still vague.

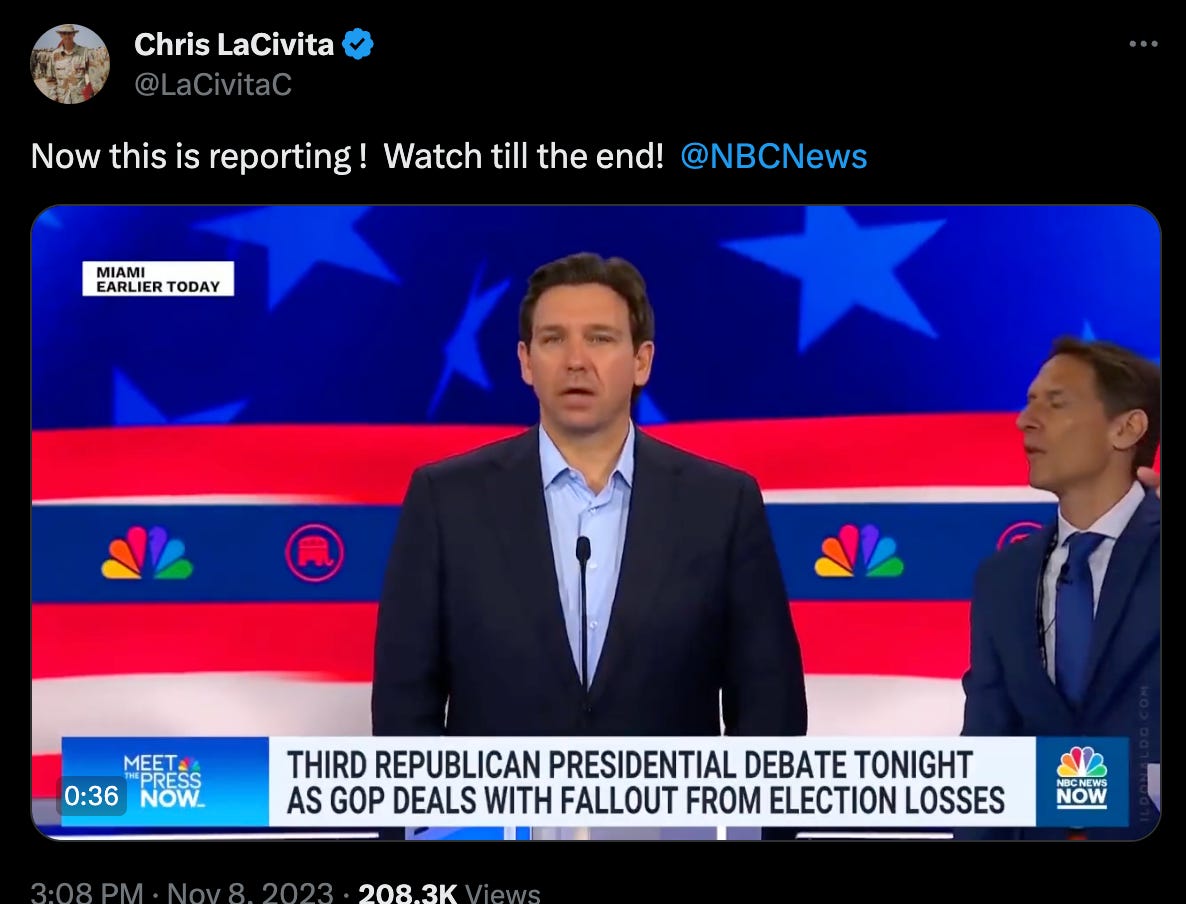

Trump campaign advisor promotes a deepfake parody

This week, a senior Trump advisor posted a parody deepfake, but it’s not what you might expect.

The video, initially shared by Chris LaCivita, showed real footage of NBC’s political correspondent Garrett Haake discussing preparations for the most recent GOP debate. In the middle of the clip, the audio imperceptibly switches from Haake’s real voice to a synthetic one, poking fun at the irrelevance of the candidates and boosting Trump at the end. The voice is indistinguishable from Haake’s real voice, but the video is clearly a parody.

This video is interesting for two reasons:

There’s a new dimension of “hybrid” deepfakes to consider

We’ve written about how synthetic audio is easier to create, more believable, and less falsifiable than AI-generated video, but this combination of real and AI-generated elements represents an emerging threat. The campaign didn’t have to doctor the visuals at all; it simply dubbed over the back half of the video with a synthetic voice, producing a clever and convincing “deepfake video” with little work.

It’s easy to imagine how this could be used in more nefarious ways to convince people of a fake message without mucking around with generative video. Other kinds of hybrid deepfakes are likely to be convincing too; for instance, mostly real images with minor AI-generated modifications intended to deceive.

The target of the deepfake isn’t a well-known politician, but a representative of a trusted brand

There’s been lots of hand-wringing about potential deepfakes of presidential candidates, but almost no discussion of deepfakes of other public figures (like journalists) that could spread misinformation just as effectively. With trust in news sources at historic lows, deceptive deepfakes of journalists could further erode that trust, in addition to swaying public opinion on whatever issue the deepfake targets.

As of this writing, NBC News has demanded that LaCivita remove the video, but it remains posted on his Twitter/X account. The FTC is clear about the illegality of using deepfakes for fraud and deception, but political parody is likely to have strong First Amendment protections. While the provenance in this situation is clear, the bigger risk is anonymous releases that can’t be traced quickly or effectively, and those that aim to actively deceive instead of merely poke fun.

Microsoft finds a strategic friend in the AFL-CIO

This week, Microsoft announced a deal with the AFL-CIO, the largest federation of labor unions in the country. It is the first AI-focused deal of its kind between a tech company and a labor organization. Microsoft pledged to remain neutral in any attempts by its workers to unionize, and agreed to work with the AFL-CIO on AI via a three-pronged approach: 1) creating a mechanism for labor to offer feedback to AI developers; 2) providing AI education to labor leaders and workers; and 3) jointly developing mutually beneficial policy proposals.

To understand why a tech company is courting a labor union, it’s useful to look at a recent tech policy battle: social media. Part of the reason federal social media legislation hasn’t gone anywhere is that, apart from a few scattered nonprofits, there’s little organized opposition to big tech companies on the topic. This leaves tech lobbyists free to roam the halls of Congress without pushback. As a result, tech companies have successfully fended off social media regulation in D.C., and that looks likely to hold true for at least the next few years.

The regulatory battle over AI will likely be a very different story because, according to many analyses, AI will eliminate many types of jobs – which is obviously of concern to labor unions. Union membership and political power have declined over the decades, but unions still have enormous sway within the Democratic Party and in blue state capitals. If humans and AI are going to compete for jobs, workers do have one advantage over AI – only humans are allowed to vote, and they will in all likelihood vote for policymakers who will protect their jobs.

If labor unions come out in force against AI, many anti-AI laws are likely to pass in states where unions are strong – like California. We’ve already seen labor flexing its muscles in Los Angeles, where the Writers Guild of America successfully negotiated terms restricting the use of AI in the deal that ended the writers’ strike.

Microsoft knows full well that it will have a lot to lose if labor unions organize to pass anti-AI bills, which explains why it’s building partnerships with powerful labor groups like the AFL-CIO ahead of job losses attributed to AI. The AFL-CIO, meanwhile, is eager to show leadership and relevance as union members nervously watch AI’s ascent, and to possibly expand its membership by unionizing tech workers themselves.

The AI regulatory landscape will be shaped in large part by the alliances and fights between tech companies and labor unions over the next few years. We will be watching closely.

The EU AI Act moves forward, but details are still hazy

EU policymakers agreed on a preliminary draft of the EU AI Act late last Friday evening, after 40 hours of negotiations. It’s a big milestone for the first major AI legislation in the Western world, arriving more than two years after the first draft of the Act was released in April 2021. Policymakers continue to hammer out details ahead of full votes in the European Parliament and the EU Council, but the broad points are known:

AI Technology Classification: AI systems are classified into risk categories (minimal, high, unacceptable) that dictate whether and how they can be deployed. An example of a minimal-risk AI system is a spam filter; unacceptable-risk systems include “social scoring” systems and the use of facial recognition for policing outside of national security applications.

Transparency Requirements: The Act requires disclaimers for chatbots, AI-generated content, and any systems that use biometric categorization. Details are still vague, but developers of generative AI will also likely face some kind of watermarking requirement.

“General purpose” Model Regulations: The Act reportedly mandates testing and risk management requirements for general purpose models like GPT-4. In addition, models whose training compute exceeds a set threshold will be considered to pose a “systemic risk” and will be subject to additional rules.

Most of the provisions in the Act won’t take effect until six months to two years after final passage, which means there will be very little impact until around 2025. The regulations apply to anyone deploying the technology in the EU, but don’t restrict developers based in EU member states from deploying prohibited technology or use cases elsewhere. While enforcement mechanisms are still murky, fines for violations are significant – up to 35 million euros or 7% of global annual revenue for the most egregious violations.

Here’s the catch – there aren’t many more details available yet. Without the actual text, it’s difficult to weigh in on the merit of the specific regulations. What we do know, however, is that the less contentious elements like banning social scoring have been in the works for several years, and are likely acceptable to technology companies and member countries alike.

The more contentious regulations for “general purpose” or foundation models were tacked on late, prompted by the rapid rise of LLMs. These have been largely opposed by both US- and EU-based tech companies, and even by French President Emmanuel Macron. Macron, in particular, argued that Europe is already behind the US and China in developing AI technology, and that strong early regulation would only lock in that gap.

The Act text should be available sometime in January, at which point we’ll take a deeper look at the details and likely impact.

Of Note

Technology

FunSearch: Making new discoveries in mathematical sciences using Large Language Models (Google DeepMind)

Science Is Becoming Less Human (The Atlantic)

Weak-to-Strong Generalization: A new research direction for superalignment (OpenAI)

CBS Launches Fact-Checking News Unit to Examine AI, Deepfakes, Misinformation (Variety)

Axel Springer and OpenAI partner to deepen beneficial use of AI in journalism (Axel Springer)

The Rise of ‘Small Language Models’ and Reinforcement Learning (The Information)

Dream of Talking to Vincent van Gogh? A.I. Tries to Resurrect the Artist. (The New York Times)

Campaigns

Inside the Troll Army Waging Trump’s Online Campaign (The New York Times)

This Congressional Candidate is using AI to have conversations with thousands of voters (Forbes)

Deepfakes for $24 a month — how AI is disrupting Bangladesh’s election (Financial Times)

Governance

Experts on A.I. Agree That It Needs Regulation. That’s the Easy Part. (The New York Times)