TikTok gets authentic

And Anthropic proposes a risk framework modeled on lab biosafety classifications

This week, TikTok adds support for labeling AI content, Anthropic and OpenAI take some baby steps on safety, and John Grisham sues OpenAI.

Do you have a friend or colleague who might be interested in subscribing? Forward this over!

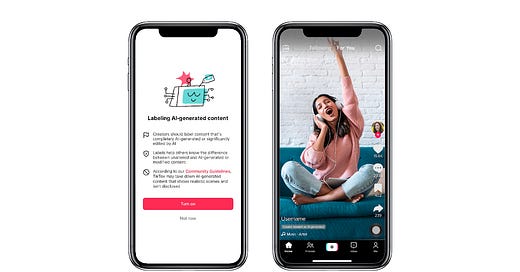

New AI labeling features come to TikTok

This week, TikTok released a new tool that allows users to label AI-generated content that they post. These labels are intended to make it easier for users to comply with TikTok’s Integrity & Authenticity policies, which require disclosure of any synthetic or manipulated media. The new tool requires users to self-label content, but the blog post states that TikTok will soon start detecting and labeling AI-generated content automatically. While details of this nascent AI detection technology aren’t available, TechCrunch reports that TikTok is “assessing provenance partnerships designed to help platforms detect AI” – presumably reading watermarks within AI-generated media, like Google’s SynthID.

We previously wrote about how standards like C2PA will be essential in increasing transparency of AI-generated content and curbing the spread of misinformation, but it’s essential to get labeling policy right if it’s going to have a meaningful impact. If major model developers get on board and provide content watermarks detectable by social networks, it won’t obviate the risk of bad actors finding open source models that don’t subscribe to provenance standards, but it might increase the friction to distribute deepfakes and reduce the amount of misleading content.

On the other hand, inconsistent labeling (whether opt-in or through most-but-not-all watermarking) might actually leave users more vulnerable to being misled by synthetic or manipulated content that isn’t labeled as such, because they now have the expectation that platforms label all AI-generated content for them. The product designers for TikTok and other social media platforms will have to think through these implications very carefully, particularly if they plan to release these labels ahead of the 2024 general election season.

There is a lesson to be learned from the history of browser security and UX design. Because the web’s original protocol, HTTP, is insecure, there has been a multi-decade effort to replace it with HTTPS, its secure counterpart. For many years, browsers tolerated both HTTP and HTTPS sites, but commonly flashed a huge warning sign if a website’s HTTPS certificate was incorrectly configured. Because plain HTTP sites triggered no warning at all, these alerts had the unintended consequence of users trusting unencrypted HTTP websites, leading to an increase in attacks like SSL stripping.

It wasn’t until 2018 that Google started showing “not secure” warnings for all HTTP sites on the web – almost 25 years after the invention of HTTPS. That interim period, with trusted and untrusted websites treated more or less equally by a user of a web browser, was fraught with peril for ordinary users. An extended period of time with a similar situation for AI labeling – some, but not all, synthetic content receiving a label on social media – will have the same risks.

Anthropic attempts to wrangle AI risk

The long-term safety of AI models can be difficult to reason about because, in part, a disaster hasn’t happened yet. Anthropic released its “Responsible Scaling Policy,” detailing how it thinks about AI-driven risks to society, and how they might escalate from here. For example, simple AIs pose absolutely no risk; models like ChatGPT pose enough risk to require safety evaluations, testing, and other measures, and future advances will require more.

Anthropic modeled its four AI safety levels on the Biosafety Level system used for laboratories (e.g., a BSL-4 lab, the highest containment level, is required to work with a pathogen like Ebola or smallpox).

In the US, BSL-3 and BSL-4 labs are regulated by either the CDC or USDA due to the risks associated with them. If the risks of AI are comparable to or greater than biohazards like smallpox (and most prominent researchers agree they are), it’s unwise to leave this classification and operation up to the companies themselves. Politically, Anthropic’s release of this document may serve both as an expression of internal safety aspirations and as a vehicle to help publicly define risks and safety measures in terms that work for it.

For its part, OpenAI opened a channel for outside experts in fields such as politics, biology, and physics to sign up to help “red team” its models, meaning testing for known adverse consequences in advance of release. The list of expertise sought includes persuasion, political science, mis/disinformation, and political use. OpenAI has red-teamed its models in the past, so it’s not a new approach, but it’s a welcome development to see the company soliciting additional subject matter expertise.

Of Note

Government

President Biden warns the UN to ‘make sure’ AI does not ‘govern us’ (Fox Business) - Countries must cooperate to govern artificial intelligence responsibly before “AI governs humanity.”

Ted Cruz Says ‘Heavy-Handed’ AI Regulation Will Hinder US Innovation (Bloomberg) - Ted Cruz says the US should avoid a “heavy-handed regulatory regime”, citing China’s progress as well as the potential economic benefits of AI.

AI Policies Under the Microscope: How aligned are they with the Public? (AI Policy Institute) - Analyzing how the specifics of AI proposals align with public opinion polls.

Sen. Young: Next AI forum will take place in October (Axios) - The second in a series of AI forums hosted by Congress is scheduled for next month, and won’t be as CEO-studded as the first.

U.K. Chancellor Jeremy Hunt defends China’s invitation to AI summit (POLITICO) - The UK plans limited Chinese participation in its AI summit, despite US and EU concerns.

Harnessing Artificial Intelligence (Will Hurd) - Will Hurd, who is a long-shot GOP candidate for President, released an AI policy pillar for his campaign. Hurd served on OpenAI's board from 2021 until 2023.

Copyright and Creative

Franzen, Grisham and Other Prominent Authors Sue OpenAI (New York Times) - A group of prominent novelists are joining the legal battle against OpenAI.

Do Studios Dream of Android Stars? (New York Times)

Other

A.I. and the Next Generation of Drone Warfare (The New Yorker) - The US military is developing “swarming” AI drones.

Centaurs and Cyborgs on the Jagged Frontier (One Useful Thing) - A Wharton professor and a multidisciplinary team analyzed the impact of ChatGPT on management consultants’ performance, with some expected findings and one interesting conclusion: overreliance on AI can backfire and result in lower-quality work.

How to Tell if Your A.I. is Conscious (The New York Times) - Reporting on nascent neuroscience/philosophy efforts to empirically measure consciousness using integrated computational theories, and the potential to apply this understanding to AI systems.

AI Chatbots Are Invading Your Local Government—and Making Everyone Nervous (WIRED) - Government agencies are implementing interim policies on using ChatGPT and similar AI, concerned about transparency laws, inaccuracy risks, and private sector interests. As we’ve chronicled, issues have occurred involving the EPA, State Dept., San Jose, an Iowa school administrator, and more.