OpenAI announces its 2024 election plans

As this year’s election season kicks into full swing, with recent major elections in Taiwan and Bangladesh and the Iowa caucuses kicking off the U.S. presidential primary season, OpenAI published its approach to global elections. It lays out three initiatives: (1) preventing abuse, (2) transparency around AI-generated content, and (3) improving access to authoritative voting information.

The most important takeaways:

1. OpenAI doesn’t want to be Facebook in 2017

The most prominent component of OpenAI’s announcement is a prohibition on using its AI tools to build applications for political campaigning and lobbying. OpenAI has clearly learned a lesson from Facebook/Meta’s woes after the 2016 election, when the social media platform was excoriated for allowing disinformation to spread widely. Executives were subsequently hauled in front of Congress to testify about Facebook’s role in the election, and the company suffered a major blow to its public image.

Getting out in front of election-related scandals by banning political campaign use nearly ten months before the general election is a savvy move. Now, if bad actors use ChatGPT or DALL-E (OpenAI’s image generation tool) in an election-related incident, OpenAI can point to its terms of service and shift the blame to the rogue actor.

Enforcement is another story. While a meddler would currently find it tough to get around DALL-E’s prohibition on generating fake images of specific people, it’s unclear whether OpenAI can even enforce a ban on generating campaign materials with ChatGPT. Ask ChatGPT to write a political fundraising email, and (as of this writing) it dutifully complies. OpenAI has no way of knowing whether that text is used for permitted purposes, such as entertainment or research, or ends up in a campaign mailer; surely there are already campaign apps built on OpenAI’s APIs, and hundreds of campaign staffers and lobbyists using ChatGPT every day.

OpenAI also announced that ChatGPT will start including links and attribution to news sources when users ask about current events. This feature is welcome, but it also introduces risk: new research shows that when LLMs cite sources, users are actually less likely to check the validity of the output, even though the LLM could be “hallucinating” the content or the citation itself. When it comes to election information, that’s not a desirable outcome.

On the voter education side, ChatGPT will direct voting-related queries to CanIVote.org, a site that points users to voting information for their own state. Large campaigns and advocacy organizations typically avoid using these state websites, which often have confusing interfaces and inconsistently updated data. Mature digital platforms like Google and Bing instead give users actionable information, such as polling place locations and hours, directly, by licensing data from Democracy Works, a 501(c)(3) nonprofit focused on providing exactly this data. It wouldn’t be surprising to see OpenAI’s approach evolve into something similar.

2. It’s a small step forward for content provenance standards

As part of its transparency initiative, OpenAI committed to adopting the Coalition for Content Provenance and Authenticity (C2PA) standard, adding metadata that identifies images generated by DALL-E. This is potentially the most impactful part of the announcement, even if DALL-E images are unlikely to play much of a role in this year’s elections.

The C2PA is an industry group building a cryptographically verifiable standard for content authenticity. Its objective is to let distributors and consumers verify the provenance of a piece of media and see its log of edits, helping users reliably distinguish synthetic (or edited) content from non-synthetic (real) content. Some tools, like Photoshop, support C2PA as a beta feature, but because publishers currently gain no benefit (such as increased distribution on social media) from integrating C2PA into their content pipelines, adoption is in its infancy.

OpenAI’s commitment to voluntarily label synthetic images with C2PA is a small but high-profile step forward for the industry effort, and it helps raise broader awareness of content provenance standards. However, as open-source models continue to proliferate, labels applied by tools like DALL-E will do little to stop motivated bad actors from generating and distributing bogus images and passing them off as authentic. Only comprehensive commitments from many more companies involved in media capture, editing, and distribution, backed by carrots and sticks from nation-states to encourage compliance, will solve the looming epistemological crisis presented by deepfakes.

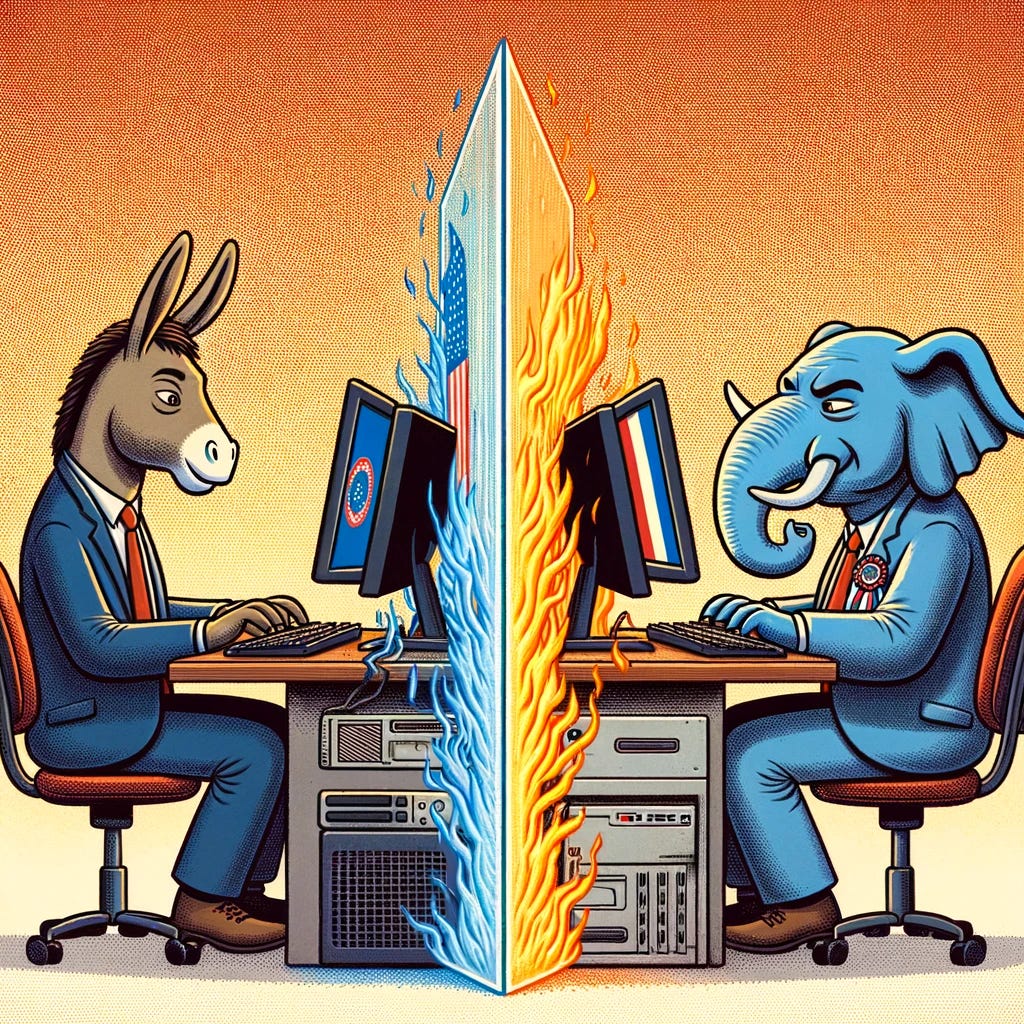

3. The future of political AI is partisan models

Whether or not OpenAI ends up meaningfully restricting campaign use of its AI tools, a partisan political AI arms race will likely emerge, in which progressive- and conservative-aligned campaign tech companies develop models for the exclusive use of their own side.

There’s plenty of precedent here: the vast majority of campaign software is partisan (ActBlue/WinRed; NGP VAN/i360; Hustle/OpnSesame), due to concerns about data privacy and anxiety about spending money with a vendor that could turn around and share best practices with political opponents.

A handful of political tech businesses have already sprung up to take advantage of AI’s potential, and more will emerge. They’ll likely use open-source models fine-tuned on political data, such as poll-tested messages and fundraising emails paired with performance data. Even if these models lag behind bleeding-edge closed-source models like GPT-4, they’ll be sufficient for the essential campaign tasks: writing email and SMS messages, creating social media posts, and producing ads.

—

Overall, this announcement was a PR win that lets OpenAI demonstrate a bit of maturity after its governance chaos a few months ago. OpenAI knows it’ll be scrutinized as the 2024 elections play out, and in the absence of regulation, it’s in the company’s own interest to make unilateral changes that are both performative (terms-of-use updates) and substantive (C2PA tags). In the meantime, both parties will start building out campaign tools using custom models they can control. The future of political AI is decentralized and partisan.

Of Note

Government

House Lawmakers Unveil No AI FRAUD Act in Push for Federal Protections for Voice, Likeness (Billboard)

California launches new broadside against tech over harmful AI content (Politico). The bill goes after the creators and distributors of AI-generated depictions of child sexual abuse.

UK government to publish ‘tests’ on whether to pass new AI laws (Financial Times)

Industry

Mark Zuckerberg’s new goal is creating artificial general intelligence (The Verge)

Generative artificial intelligence will lead to job cuts this year, CEOs say (Financial Times)

Google DeepMind’s new AI system can solve complex geometry problems (MIT Technology Review)

Democratic inputs to AI grant program: lessons learned and implementation plans (OpenAI)

AI poisoning could turn open models into destructive “sleeper agents,” says Anthropic (Ars Technica)

ChatGPT’s FarmVille Moment (The Atlantic)