This week, Meta and Google rolled out new policies restricting generative AI in political advertising, but the most consequential ad platforms lack similar restrictions; Biden uses his AI Executive Order (EO) to add friction for foreign actors seeking access to advanced AI; and soon we won't be able to tell real voices from AI-generated ones.


Big tech gears up for election deepfakes; where’s TV?

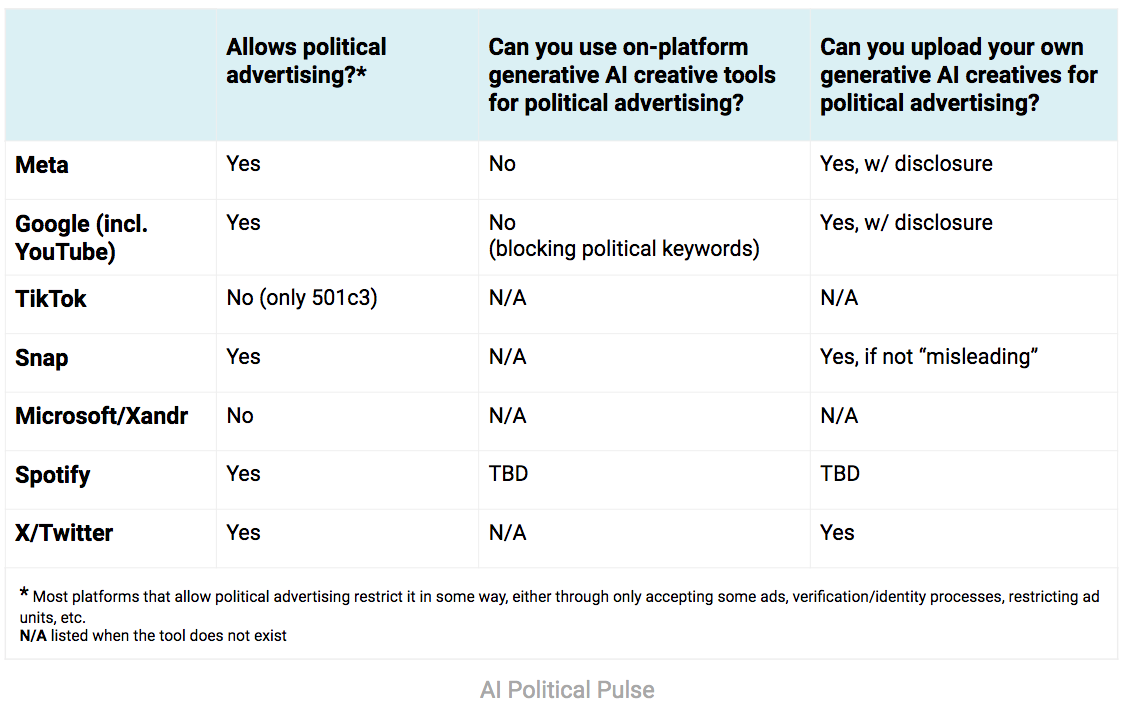

A year out from the November 2024 elections, major digital advertising platforms like Meta and Google are rolling out new policies for the use of synthetic content in political ads.

Specifically, Meta has banned the use of its new generative AI advertising tools in political ads and announced new disclosure rules for political ads that contain any of the following:

Synthetic content that depicts real people saying or doing things they didn't say or do;

Realistic-looking people who don't exist or events that didn't happen;

Manipulated footage of real events;

Depictions of realistic events that allegedly occurred, presented through images, video, or audio that are not a true record of the event.

Google plans to update its ads policies to require similar disclosures, and Microsoft announced measures intended to reduce election-related threats and endorsed the bipartisan Protect Elections from Deceptive AI Act in the Senate.

The policies are good initial steps for these highly visible platforms, effectively creating a self-governing regime to mitigate deepfake risks. However, synthetic political content can still be distributed over many channels that lack clear policies. These include broadcast and cable television, which still account for the largest share of political ad spending, and fast-growing streaming video platforms like Hulu and Tubi. Political ad tracking company AdImpact expects political advertisers to spend almost $9 billion on traditional and connected television in the 2024 cycle, 7.5x more than the total expected spend on Meta and other digital advertising platforms like Google and X/Twitter.

The case for synthetic content policies is even stronger here because of these platforms' immense reach and influence, but the politics are different. Broadcast/cable television and streaming video platforms don't yet face the same pressure as Meta and, to a lesser extent, Google and Microsoft to stay on top of emerging AI threats. Without further federal and/or state regulation, the rules will remain a confusing patchwork, and the most consequential platforms will lack guardrails around AI-generated political ads.

The current state of political advertising using generative AI across major platforms

Papers, please: US Know Your Customer requirements for foreign entities

Last week we promised a deeper dive into additional sections of Biden's Executive Order on AI; this week we're taking a closer look at some of the directives in the Safety & Security section that seek to reduce threats from malicious foreign actors.

The Biden Administration is using the AI Executive Order (EO) to further restrict malicious foreign actors' access to advanced AI. In the Safety and Security section, one of the most detailed in the EO, Biden calls on the Secretary of Commerce to propose know-your-customer (KYC) regulations for AI cloud computing companies within 180 days. KYC requirements are standard practice in finance: regulators require financial institutions to collect detailed personal and financial information from new customers before opening accounts, to prevent fraud, money laundering, terrorist financing, and a host of other crimes.

The EO applies similar logic to advanced AI models, proposing that U.S.-based cloud companies like Amazon better identify the customers using their large GPU clusters to train AI models. Specifically, these companies should verify the identity of any foreign person attempting a large AI model training run and submit reports about those attempts to the Commerce Department.

The U.S. is clearly seeking to prevent foreign adversaries from training and deploying powerful AI models that could be used for military purposes or for commercial advantage. The Biden administration's chip export controls, which restricted China's access to the most advanced GPUs, were an initial attempt to ensure U.S. AI supremacy and reduce the risk of foreign threats. But because U.S. cloud companies rent out these chips, there's an obvious loophole: foreign governments could train their models on cutting-edge GPUs inside the U.S., using friendly corporations as proxies. By forcing these cloud companies to adopt strong KYC standards, the U.S. will make such attempts harder to pull off.

Overall, this is a smart directive. Some might argue it increases the likelihood of regulatory capture by adding costs and operational complexity that startups couldn't absorb, but given how narrowly targeted the measure is, the cost of providing this extra transparency is minimal. It should make it harder for bad actors to find lax or willfully ignorant cloud GPU providers whose cutting-edge hardware they can use to train and deploy their AI models.

We won't be able to tell AI-generated voices from real ones soon

Synthetic audio took a step forward this week, with researchers from AI startup Koe releasing code that allows users to generate synthetic voices in milliseconds. This means synthetic audio no longer has to be produced ahead of time: these tools can generate artificial voices that are virtually indistinguishable from human speech, and do so in real time. There are plenty of interesting positive use cases for this (e.g., real-time language translation), but the potential for misuse is significant.

We've written in the past about the unique challenges deepfake audio recordings present, from the difficulty of detecting them to the ease of distributing them through more vulnerable channels. Now that synthetic voices can be generated in real time, cloned voices can impersonate individuals in contexts that require spontaneous responses, such as personal phone calls, where pre-recorded answers would be easy to detect.

Unlike AI-generated video, which is still in the uncanny valley, convincing voice deepfakes are here now. We've already seen them deployed in attempts to influence elections and commit fraud; the potential for mischief will only increase from here.

In the short term, the best opportunity to curb abuse by malicious domestic actors would be for the FCC to act quickly and clarify that audio deepfakes are subject to the Telephone Consumer Protection Act (TCPA). Longer term, as we move into a world in which a voice may no longer reliably indicate a person's identity, policymakers and companies will have to explore alternative methods of identity verification and raise public awareness about the capabilities and dangers of synthetic voice technologies.

Of Note

Copyright

AI companies have all kinds of arguments against paying for copyrighted content (The Verge)

OpenAI promises to defend business customers against copyright claims (TechCrunch)

Adobe Stock is Selling AI-Generated Images of the Israel-Hamas Conflict (PetaPixel)

Society

How AI fake nudes ruin teenagers’ lives (The Washington Post)

For Hollywood, AI is a threat. For these indie filmmakers, it’s a lifeline (LA Times)

The UN Hired an AI Company to Untangle the Israeli-Palestinian Crisis (WIRED)

Inside the EU’s quest to control global AI politics (POLITICO)

Technology

Announcing Grok (x.ai)

Tech Start-Ups Try to Sell a Cautious Pentagon on A.I. (The New York Times)

Google Photos' Magic Editor will refuse to make these edits (Android Authority): Magic Editor cannot edit human faces and body parts, photos of ID cards, receipts, and more.