This week, NYC Mayor Eric Adams is sending deepfake phone calls to constituents; researchers were able to easily break AI models’ safety guardrails; and big developments in semiconductor export controls could have implications for domestic and international companies alike.

Mayor Adams says nǐ hǎo

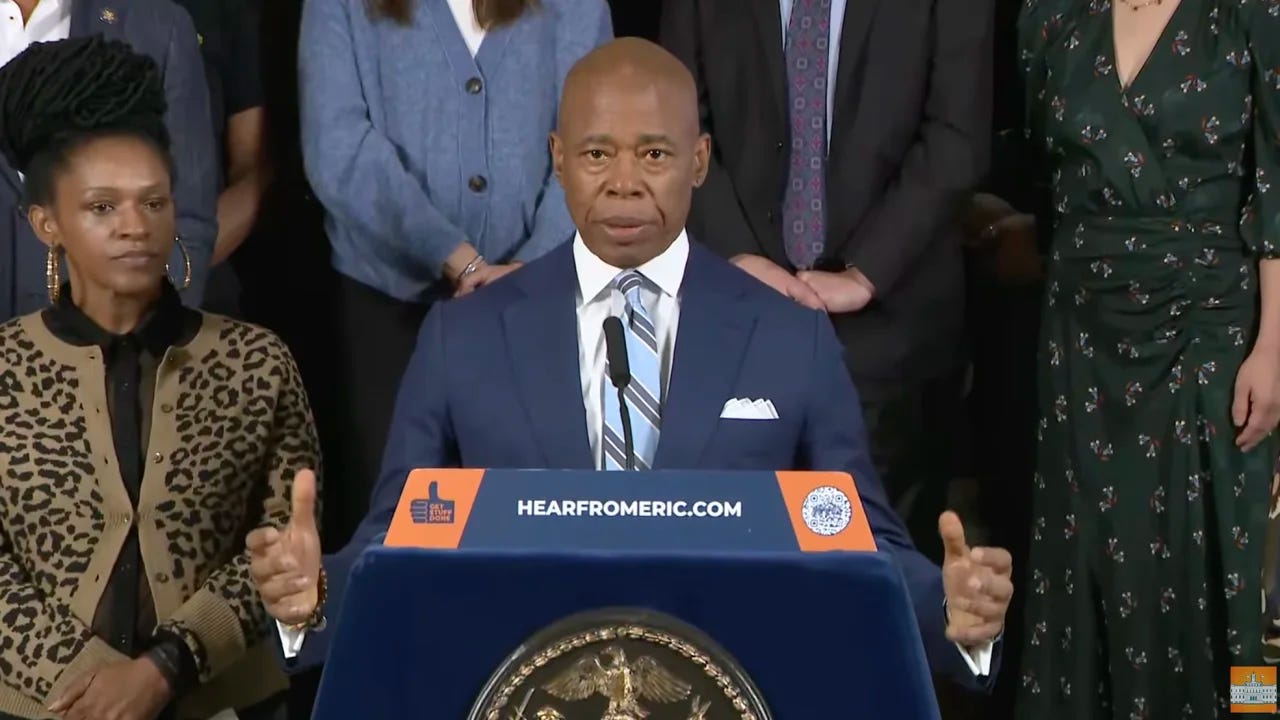

New York City’s mayor has found time in between doing the second toughest job in America and rolling out a new AI plan to become a polyglot.

Mayor Eric Adams has been an eager technology adopter since taking office, championing the city as a tech hub, taking his first paycheck in office in Bitcoin, and generally courting the tech community. He’s been similarly bullish on AI, this week rolling out the city’s Artificial Intelligence Action Plan alongside a chatbot that connects business owners with helpful city information.

The press conference announcing the AI plan and bot took an unexpected turn, though, sparking controversy when Adams announced that as part of his embrace of AI tools, he had been making phone calls to constituents using an AI model to produce deepfake versions of his voice in various languages.

The deepfake calls apparently went out to “thousands” of residents in Mandarin, Yiddish, Spanish, Cantonese, Haitian Creole, and possibly more languages. This is a puzzling and gimmicky use of synthetic audio because the calls were simply relaying information about municipal job fairs. Why would these calls need to sound like the mayor unless he's aiming to enhance his political appeal to specific demographics?

Even if this was just a publicity stunt, it raises interesting questions about how existing laws might apply to this use case. Unlike the relatively new problem of distributing AI-generated video over social networks, making calls with an “artificial voice” over wireless networks has been regulated since 1991, when lawmakers were concerned about robocalls employing nascent text-to-speech technology. In response to such calls and robocalls in general, Congress passed the Telephone Consumer Protection Act (TCPA), which requires any caller using an “artificial or pre-recorded voice” to call a mobile phone number to have prior consent from the recipient.

The key question is whether the term “artificial voice” applies to Mayor Adams’ deepfakes. On one hand, the voices on the calls are plainly artificial (the A in AI is, after all, “artificial”), but on the other, Adams’ real voice was used to generate the translations. Either the FCC will have to make this definition explicit, or similar cases will have to be litigated in court under the TCPA’s private right of action. If the newly minted Democratic majority on the FCC wants to curb the threat of deepfake calls, especially ahead of the 2024 election, Chairwoman Jessica Rosenworcel should probably take a close look.

Researchers easily break “safe” models

To avoid regurgitating the worst of the internet, most widely used large language models go through an “alignment” process, in which the models are taught to be helpful and positive and to avoid “unsafe” subjects like racism, violence, and how to build a bomb. The rules and methods used for alignment vary, but they generally involve having humans and automated systems teach the model to distinguish between good and bad outputs.

When models like ChatGPT emerge from the training and alignment processes, they’re akin to a Swiss Army knife: adept at areas as varied as text summarization, art history, programming, and more. For some use cases, a broadly capable model can be too expensive, unfocused, or simply tonally inappropriate for the domain. In those instances, a developer or deployer might turn to a process called “fine-tuning”, where additional specific examples are fed to a model to refine its output.

For instance, let’s say a company is creating an accounting assistant for German bookkeepers. The company’s team could fine-tune an open model like Meta’s Llama 2 to focus only on the professional German accounting terminology it needs to know, by feeding it examples of proper and improper bookkeeping details that the company creates itself. This increases the reliability of the assistant’s output and lowers costs by getting good results without the use of costlier, cutting-edge models like GPT-4.

Fine-tuning is a valuable method, but it turns out it’s not without its own set of risks. Last week, a research team from Princeton and the University of Virginia announced that in the course of fine-tuning large language models, they were able to easily break the models’ basic safety alignment, causing the models’ “harmfulness ratings” to skyrocket by more than 80%. Alarmingly, even benign fine-tuning could compromise the models’ safeguards. The hypothetical German bookkeeping AI startup could inadvertently ship a product that offers dangerous or controversial information just by tweaking the model to use professional terminology.

The clear tradeoffs between safety, power, and transparency will become even more apparent as these models’ capabilities advance. The concept of “red-teaming” AI models – letting hackers try to uncover their flaws through adversarial testing – is often mentioned by both AI companies and government agencies as an essential prerequisite for deploying a model to the public. But as these researchers have shown, testing the model by itself is inadequate if the model will be empowered after the fact through additional capabilities such as fine-tuning or internet connectivity, because any safety protections can quickly break down.

Therefore, safety evaluations for large AI models must consider the model as one component within a broader system, assessing interactions among models, users, and the external environment. This holistic approach aligns with regulatory precedent for other complex real-world technologies. For example, the FDA doesn’t permit pharmaceutical companies to release a new medication based solely on its effectiveness for a specific symptom. Instead, it mandates drug interaction tests during clinical trials and insists on post-release safety monitoring. AI regulators would be well advised to follow a similar approach.

U.S. limits chip exports while China ramps up R&D

There’s major regulatory action in the semiconductor sector. The U.S. government is ratcheting up measures to restrict China’s access to semiconductors that power sophisticated AI models and military technologies. Large U.S. chipmakers have been lobbying against these new restrictions for months, and the limits are expected to have significant implications for Nvidia, which generates almost a quarter of its revenue from Chinese data centers.

The Atlantic also reported that the government may be considering further restrictions on general-purpose AI technology, which would have far-reaching effects on companies releasing open-source models (like Meta). Amid these export control disputes, Chinese tech giants like Baidu are ramping up their AI R&D, with Baidu claiming that its newest model matches the capabilities of OpenAI’s GPT-4.

Of Note

Government

How a billionaire-backed network of AI advisers took over Washington (POLITICO)

Sen. Schumer’s next Innovation Forum is coming next week, with startups and VCs in attendance (Axios)

Government Use of AI (AI.gov) Federal departments have compiled 700 AI use cases across the government.

No Fakes Act wants to protect actors and singers from unauthorized AI replicas (The Verge) A new bill would prevent the creation of a deepfake of a real person without consent, unless it is part of news, civic, parody, or other such purposes. (Oddly, it excludes sports broadcasts from the restrictions.)

The Inigo Montoya Problem for Trustworthy AI (Center for Security and Emerging Technology)

SEC Head: Financial Crash Caused by AI 'Nearly Unavoidable' (Vice)

Technology and Industry

Hollywood's AI issues are far from settled after writers' labor deal with studios (CNBC)

Stack Overflow lays off over 100 people as the AI coding boom continues (The Verge)

Waymo Cuts Staff in New Round of Layoffs (The Information) Another layoff at Google’s self-driving car division.

Deepfake Porn Is Out of Control (WIRED)

Maybe We Will Finally Learn More About How A.I. Works (The New York Times) Stanford researchers ranked popular AI language models like GPT-4 and Meta’s LLaMA 2 by their transparency.

AI Chatbots Can Guess Your Personal Information From What You Type (WIRED)